From RankNet to LambdaRank to LambdaMART: An Overview (Chris Burges)

Deriving lambda from pairwise cross-entropy

Problem: query , documents and . Predicted scores:

means should be ranked before .

where is a scalar that determines the shape of the sigmoid.

Given relevance labels for each doc (1, 0 or -1), let if , if and if .

True probability:

Cross-entropy cost:

If we take the derivative of the cross-entropy loss with respect to the predicted score :

SGD update is:

Let denote indexes such that . Therefore, always.

Summing up all contributions to the update of weight :

where

is a force attached to doc , the direction of which indicates the direction we would like the URLs to move (to increase relevance), and the length of which indicates by how much. It is computed from all the pairs in which is a member.

SGD: weights are updated after each pair.

Instead, we can use mini-batch SGD and accumulate s.

This speeds up training from ( = number of docs) to

Optimizing information retrieval measures

Information Retrieval (IR) measures as a function of model scores are discontinuous or flat. Thus, gradients are zero or undefined.

Expected reciprocal rank (inspired by a cascade model where user scrolls until it finds a result):

where is the prob that user finds item relevant.

ERR is the expected probability to arrive at the relevant item over the result length.

RankNet is optimizing a smooth, convex approximation of the number of pairwise errors (since cross-entropy penalizes )

Explanation in the LambdaRank paper:

Suppose that a ranking algorithm is being trained, and that at some iteration, for a query for which there are only two relevant documents and , A gives rank one and rank . Then on this query, has WTA (winner takes all) cost zero, but a pairwise error cost of (since is higher than docs at position . If the parameters of A are adjusted so that has rank two, and rank three, then the WTA error is now maximized (=1), but the number of pairwise errors has been reduced by . Now suppose that at the next iteration, is at rank two, and at rank ≫ 1. The change in ’s score that is required to move it to top position is clearly less (possibly much less) than the change in ’s score required to move it to top position. Roughly speaking, we would prefer to spend a little capacity moving up by one position, than have it spend a lot of capacity moving up by positions.

Cross-entropy doesn't make this distinction. It minimizes the pairwise number of errors. Rather than deriving gradients from a cost, it would be easier to write them down directly.

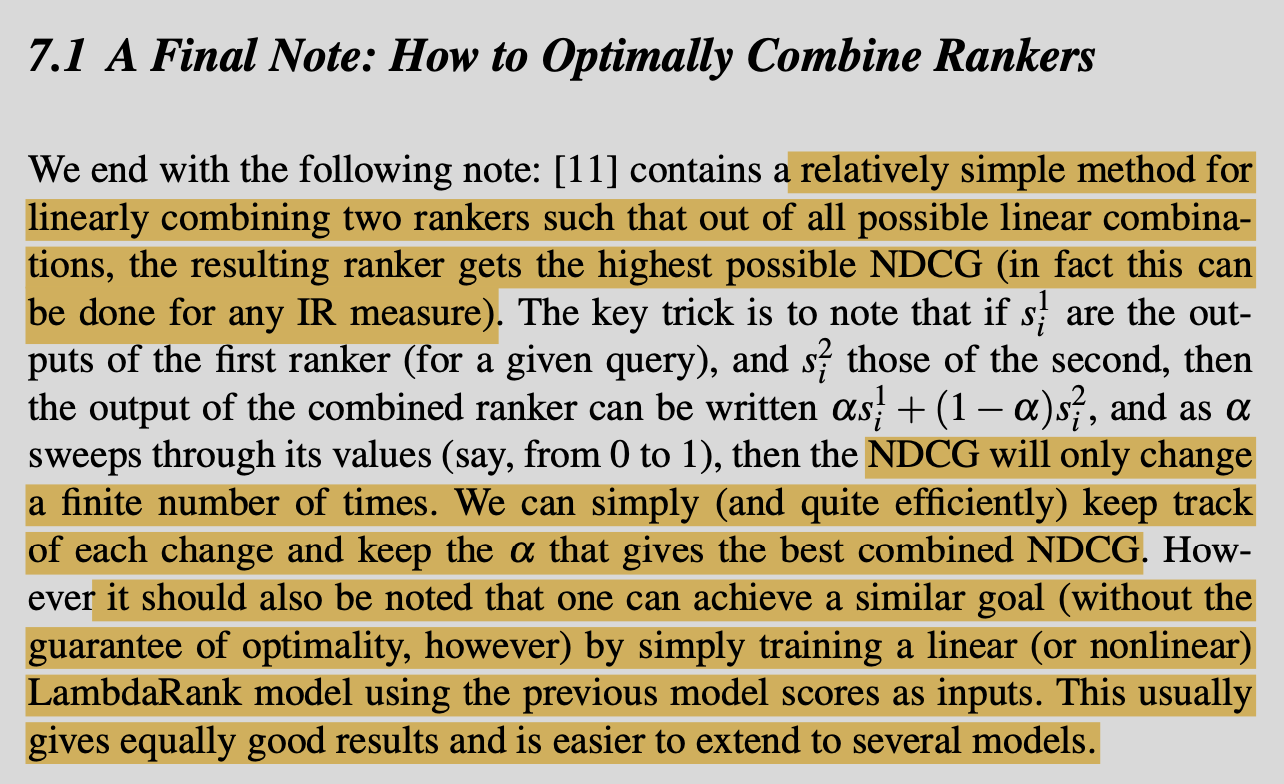

The forces s are exactly those gradients. Empirically, experiments show that simply multiplying by , the NDCG change by swapping position and gives very good results. In LambdaRank, we imagine that there is a utility such that (recall that ):

Although information retrieval measures, viewed as a function of scores are flat/discontinuous, LambdaRank bypasses this problem by computing gradients after docs have been sorted. Thanks to the value, we can give more importance to earlier ranks.

Every document pair generates an equal and opposite , and for a given document, all the lambdas are incremented (from contributions from all pairs in which it is a member, where the other member of the pair has a different relevance label). This accumulation is done for every document for a given query; when that calculation is done, the weights are adjusted based on the computed lambdas using a small (stochastic gradient) step. This speeds up training.

Empirical optimization of NDCG

Empirically, such a model optimizes NDCG directly. To optimize other metrics, just change into the appropriate measure.

Let be the average NDCG of our model over all queries. By fixing every parameter except one , we can define some gradient: where is the learned parameter. As changes, this gradient resembles little step functions.

We want to show that is a local maximum. Computing the Hessian is too computationally challenging, so instead, we choose sufficiently many random directions in weight space and check that is decreasing as we move away from the learned weights . They derive some p-value of missing an ascent direction (it's a one-sided Monte-Carlo test) to choose the minimum number of directions required.

*Can we always find a cost such that arbitrary s are its gradient?

Poincarré's Lemma states that are partial derivatives of some function (i.e. ) iff

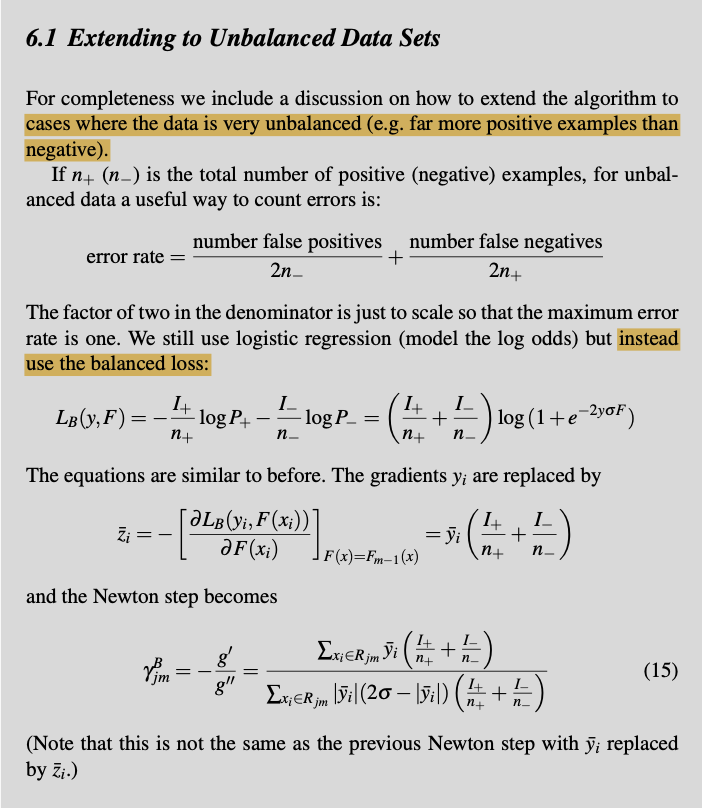

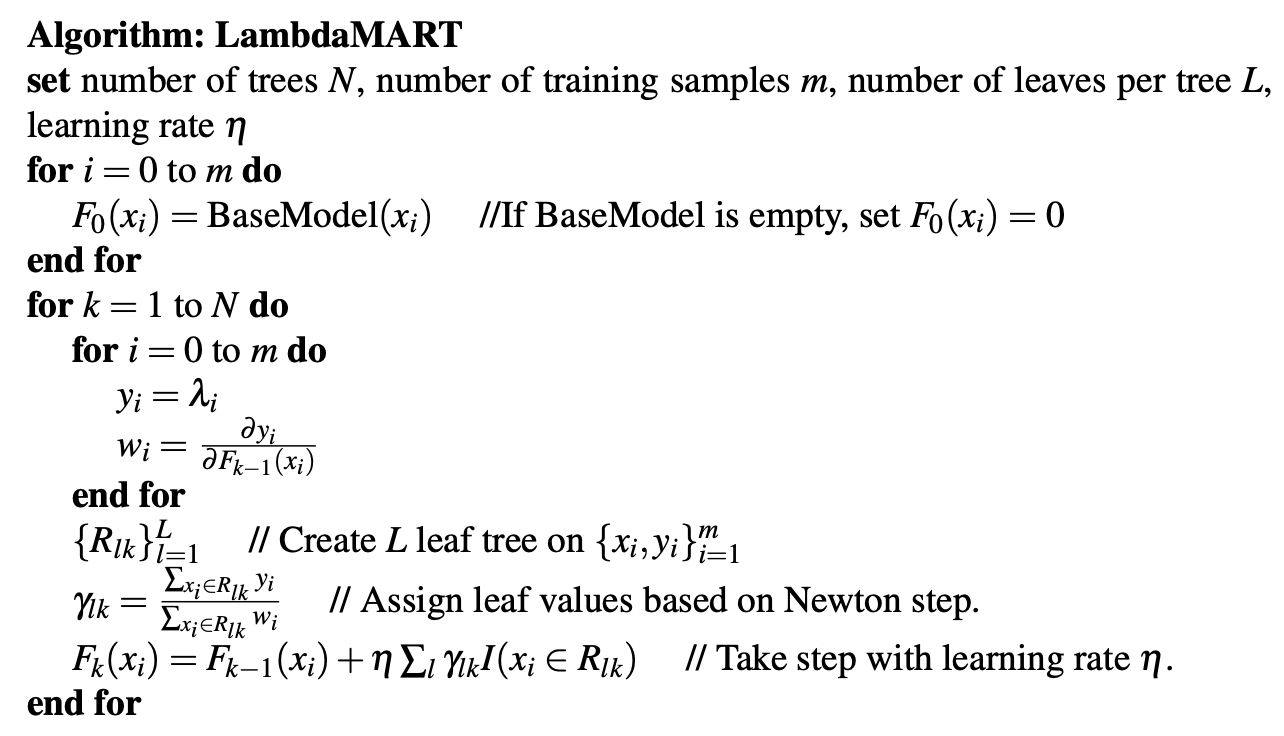

Application to MART (binary classification)

MART = gradient boosting (see notes on the gradient boosting paper)

Quick recap:

Final model is a linear combination of weak learners:

where and are learned in a greedy stagewise manner:

The update is then:

Descent direction is the gradient:

This gives the steepest descent in N dimensional data space at but it's only defined at data points and cannot be generalized to other . We take the closest least square estimate and use line search.

Base learner is a tree with terminal nodes:

where is a disjoint partition of .

where

Since are disjoint, we can optimize each independently:

For binary classification, we take a Newton step since there is no closed form solution to :

where is the loss we're optimizing:

This can be expressed with respect to the pseudoresponses:

These are the exact analogs to the Lambda gradients. We can specify the pseudo-responses (lambdas) directly to move in a certain direction.

The s are defined as:

We can find the cost for which they are the derivative:

The pseudo-responses are then just

It’s useful to compare how LambdaRank and LambdaMART update their parameters. LambdaRank updates all the weights after each query is examined. The decisions (splits at the nodes) in LambdaMART, on the other hand, are computed using all the data that falls to that node, and so LambdaMART updates only a few parameters at a time (namely, the split values for the current leaf nodes), but using all the data (since every lands in some leaf). This means in particular that LambdaMART is able to choose splits and leaf values that may decrease the utility for some queries, as long as the overall utility increases.