Model Free Control

- when MDP model is unknown but we can sample trajectories from the MDP, or

- when MDP model is known but computing value function via model-based control method is untractable

In policy iteration, we take but we can replace it with which is the discounted sum of returns, starting from state and taking action .

Policy needs to take every action with some positive probability, so that we can compute the value of each state-action pair.

-greedy policy with respect to state-action value :

We can show that the -greedy policy with respect to is a monotonic improvement on policy , meaning . See page 3, didn't understand the proof.

greedy in the limit of exploration (GLIE):

-

all state-action pairs are visited an infinite number of times

-

policy converges to the policy that is greedy with respect to the learned Q-function:

One example is decaying to zero with ( is the episode number).

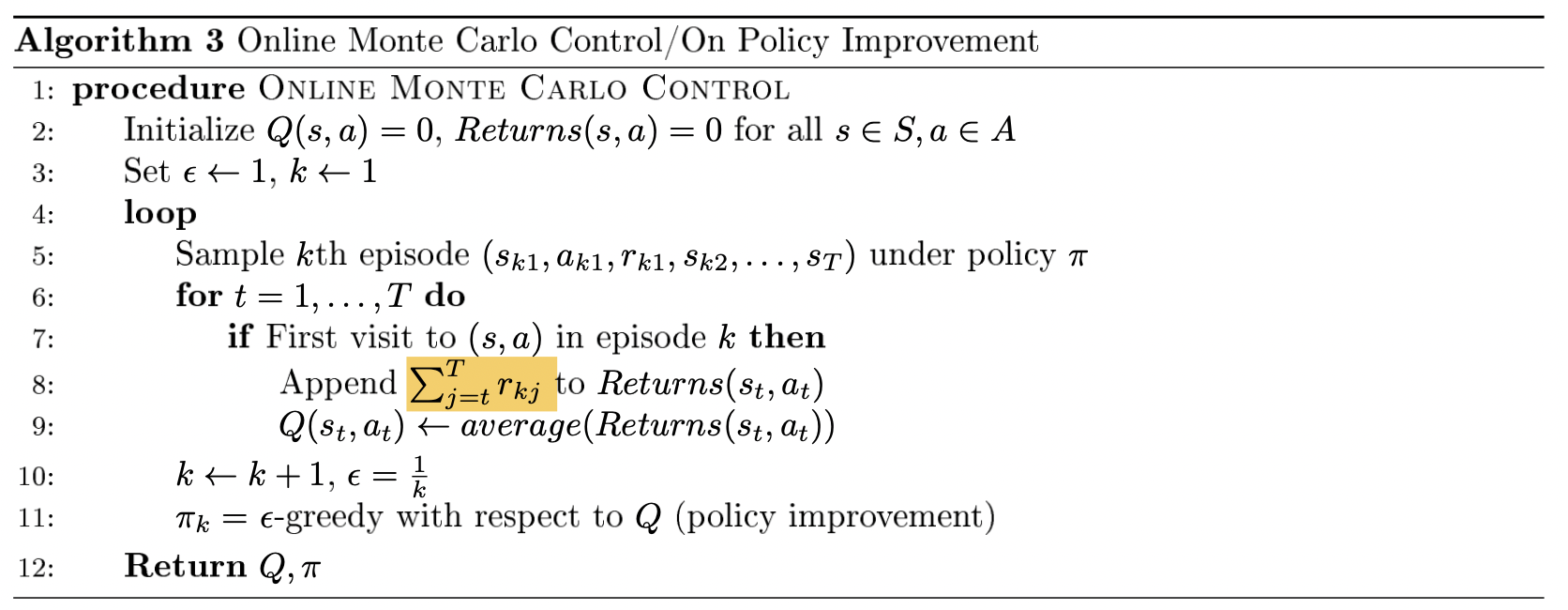

Monte Carlo Control

If the -greedy strategy is GLIE then the Q value derived will converge to the optimal Q function.

Temporal Difference Methods

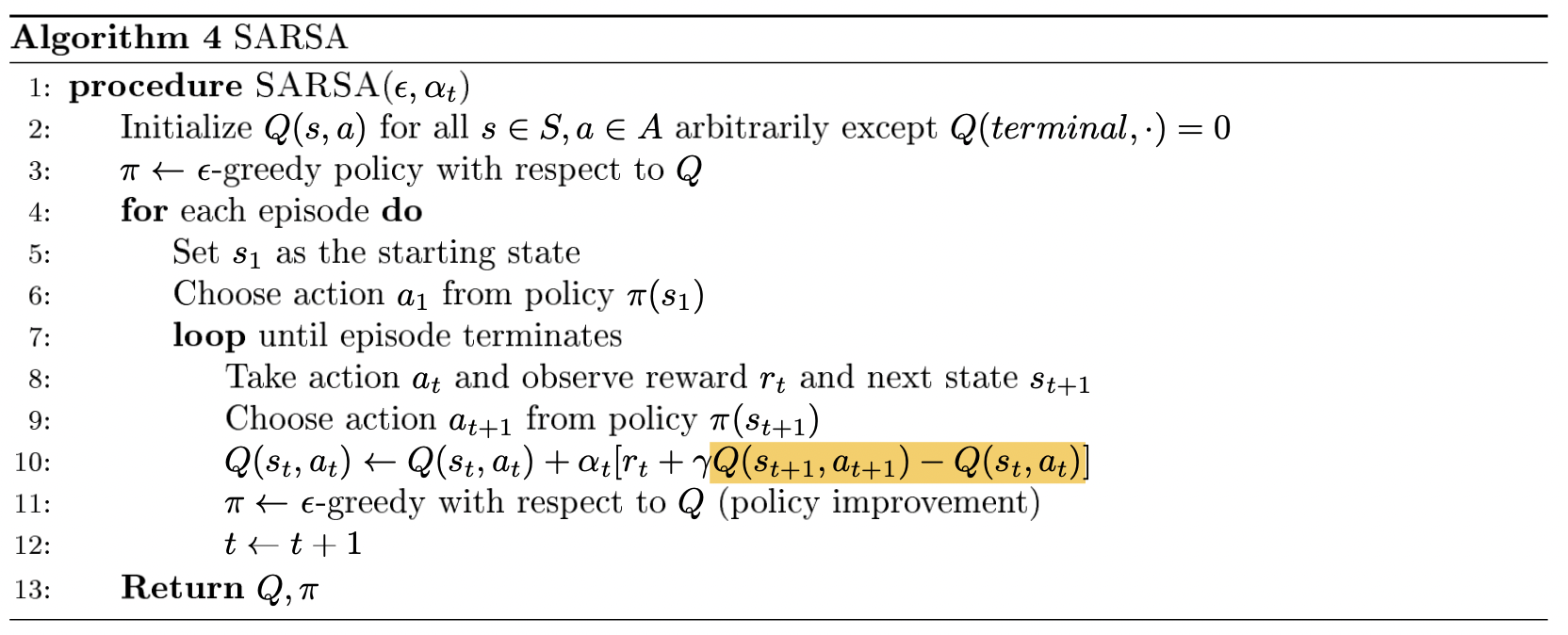

SARSA: on-policy

Called SARSA because we're using the values derived from the same policy.

SARSA converges to optimal Q if:

-

sequence of policies is GLIE

-

step-sizes satisfy the Robbins-Munro sequence such that and

Q: how do you choose action $a_{t+1}$? If we just take the arg max, isn't it Q learning?

No: the action is chosen with an espilon greedy policy.

Importance sampling: off-policy

becomes

We only use one sample vs the entire trajectory like in Monte Carlo. Thus has significantly lower variance than Monte Carlo.

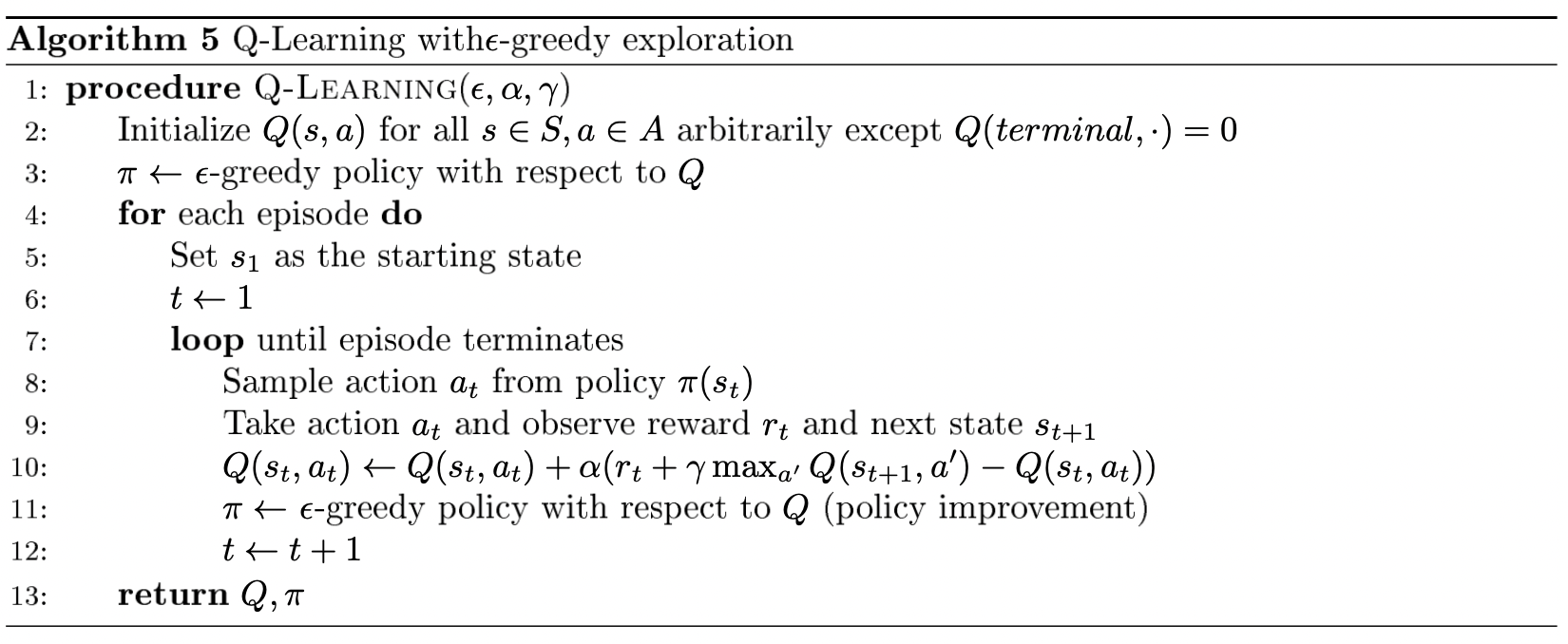

Q-learning: off-policy

SARSA update took the form:

but we can bootstrap the Q value at the next state:

Q-learning always assumes optimal behavior in the future, even while the agent is still learning. SARSA uses the actual next action taken by the policy (which may be exploratory if it's epsilon-greedy), while Q-learning uses the best possible next action according to the current Q estimate.

Why is Q-learning off-policy and SARSA on policy?

The reason that Q-learning is off-policy is that it updates its Q-values using the Q-value of the next state and the greedy action . In other words, it estimates the return (total discounted future reward) for state-action pairs assuming a greedy policy were followed despite the fact that it's not following a greedy policy.

The reason that SARSA is on-policy is that it updates its Q-values using the Q-value of the next state and the current policy's action . It estimates the return for state-action pairs assuming the current policy continues to be followed.

The distinction disappears if the current policy is a greedy policy. However, such an agent would not be good since it never explores.

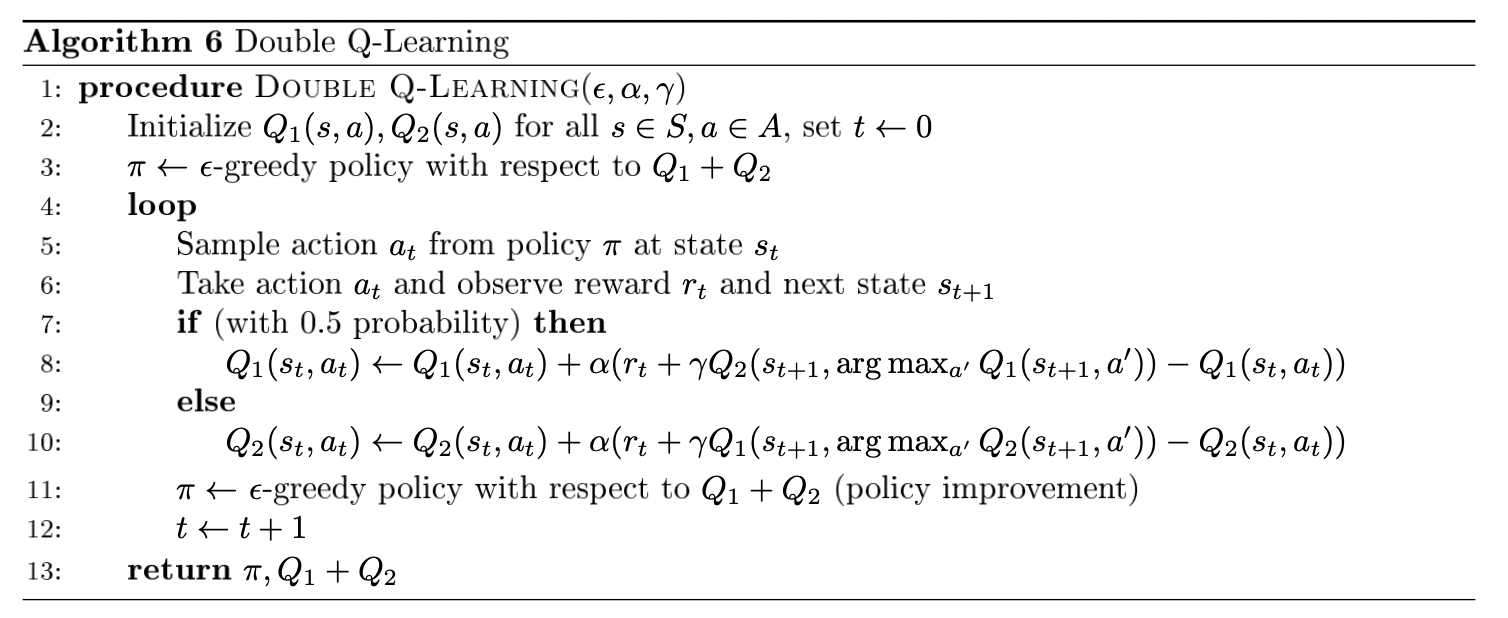

Double Q-learning

maximization bias appears when we are using our estimate to both choose the better action and estimate its value. (See coin example p.7)

Suppose two actions have mean reward . We have . Suppose we estimated state-action values via Monte-Carlo: . Let be the greedy policy w.r.t. our estimates. Thus:

by Jensen's inequality and since each estimate is unbiased.

Therefore, we're systematically overestimating the value of the state.

We can get rid of this bias by decoupling the max and estimating the value of the max. We maintain two independent unbiased estimates and . When we use one to select the maximum, we can use the other to get an estimate of the value of this maximum.

where we take the over .

Double Q-learning can significantly speed up training because it eliminates suboptimal actions faster.