TLDR:

-

factorization machines (FMs) learn linear model with interaction features, where each interaction weight is given by the dot product of latent vectors (there is one latent vector per feature). This makes the interaction weights dependent (contrary to SVMs).

-

comparison to matrix factorization (MF):

-

FMs are NOT learned with MF although MF can be mimicked by a certain specification of factorization machines.

-

FMs bridged the gap between MF and models that could incorporate additional features (e.g., context, user demographics).

-

Unlike MF, which assumes a fixed user-item matrix, FMs can model any interaction matrix derived from feature engineering.

-

FMs underperformed compared to more specialized models in RecSys, particularly when advanced MF techniques (e.g., Alternating Least Squares or Stochastic Gradient Descent-based MF) were used.

-

-

The mathematical expression of FMs is elegant in that it encompasses other model classes such as MF. However, FMs can struggle to scale efficiently to very large datasets due to the increased complexity of handling all pairwise interactions. They were eventually overtaken by neural models like NCF, which are more expressive and scalable.

Formulation

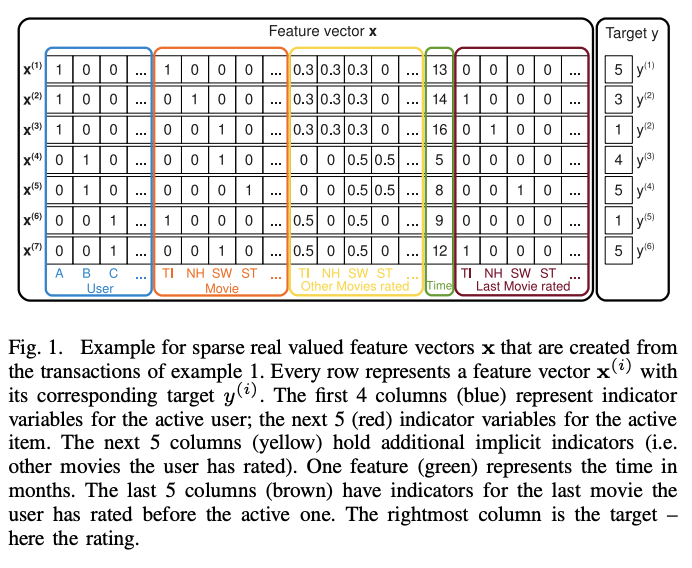

This is different than matrix factorization. Namely we are not learning over a feedback matrix, but rather over a classical dataset of pairs:

Given a feature vector , an interaction model of degree 2 is given by:

It captures all single and pairwise interactions between the features:

- is the global bias

- models the strength of the i-th variable

- represents the interaction between the i-th and j-th features and is modeled as the dot product between 2 latent vectors and ( is the latent dimension) (contrary to a linear / polynomial SVM which learns independent parameters, though sharing the same formulation)

By sharing the latent vectors and not learning independent interaction weights, we can learn high order interactions under sparsity. Factorization machines break the independence of the interaction parameters by factorizing them. The feature vector for one interaction helps estimate the feature vectors for related interactions.

In recommender systems, one reason for high sparsity is the presence of large categorical variable domains (e.g. item ids, user ids)

In the paper, they reformulate the model to compute it in linear time.

We can use different losses, depending on the prediction task:

- Regression (real target): mean squared error

- Binary classification: hinge loss (, where target ) or binary cross-entropy

- Ranking: pairwise classification loss.

Generalization to d-way interactions

For , the interaction term becomes:

just means we're summing over the element-wise product between the 3 latent vectors.

The generalized formula is ugly and given in the paper. The specificity is that they actually learn one latent matrix for each degree .

Equivalence: anecdotally, the paper shows that factorization machines can be equivalent to matrix factorization and other models when designing the proper input features. For matrix factorization, the input vector is the concatenation of a one-hot encoding of the user set and a one-hot encoding of the item set, i.e. is a vector with ones at user index and item index ( since we concatenated the 2)